#libarchive 3.7.9 has been released (#MultiFormatArchive / #CompressionLibrary / #FileArchiver / #DataCompression / #7Zip / #7z / #RAR / #ZIP / #GZip / #TAR / #XAR / #WARC / #BZIP2 / #XZ) https://www.libarchive.org/

#warc

I've mirrored a relatively simple website (redsails.org; it's mostly text, some images) for posterity via #wget. However, I also wanted to grab snapshots of any outlinks (of which there are many, as citations/references). By default, I couldn't figure out a configuration where wget would do that out of the box, without endlessly, recursively spidering the whole internet. I ended up making a kind-of poor man's #ArchiveBox instead:

while IFS= read -r url ; do
  dirname=$(echo "$url" | sha256sum | cut -d' ' -f 1)  # SHA-256 of the URL names the job directory
  mkdir -p "$dirname"
  wget --span-hosts --page-requisites --convert-links --backup-converted \
    --adjust-extension --tries=5 --warc-file="$dirname/$dirname" \
    --execute robots=off --wait 1 --waitretry 5 --timeout 60 \
    -o "$dirname/wget-$dirname.log" --directory-prefix="$dirname/" "$url"
done < others.txt

Basically, there's a list of bookmarks^W URLs in others.txt that I grabbed from the initial mirror of the website with some #grep foo. I want to do as good of a mirror/snapshot of each specific URL as I can, without spidering/mirroring endlessly all over. So, I hash the URL, and kick off a specific wget job for it that will span hosts, but only for the purposes of making the specific URL as usable locally/offline as possible. I know from experience that this isn't perfect. But... it'll be good enough for my purposes. I'm also stashing a WARC file. Probably a bit overkill, but I figure it might be nice to have.
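The "grep foo" step isn't shown in the post. Here is a minimal sketch of how such a list could be built, assuming GNU grep and a mirror directory already on disk. The function name `extract_outlinks`, the URL regex, and both arguments are illustrative assumptions, not the author's actual commands:

```shell
# Hypothetical helper: print every distinct http(s) URL found under a
# mirror directory, skipping links that point back at the mirrored host.
# $1 = mirror directory, $2 = host of the mirrored site (required)
extract_outlinks() {
  grep -rhoE "https?://[^\"'<>[:space:])]+" "$1" \
    | grep -vF "://$2" \
    | LC_ALL=C sort -u
}
```

Something like `extract_outlinks ./redsails.org redsails.org > others.txt` would then feed the loop above. The regex is deliberately crude: it will also pick up URLs in scripts and stylesheets, so the list is worth a quick look before archiving from it.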

DeepSeek probably knows your secrets and API keys ;)

Searching resources for API keys is not a novel practice, and attackers use it very often during the reconnaissance stage. Numerous tools exist that can search, for example, code repositories (e.g. on GitHub). Cases where production credentials end up in public databases are no exception, so it is no surprise that researchers...

#WBiegu #Ai #Deepseek #Llm #UczenieMaszynowe #WARC

https://sekurak.pl/prawdopodobnie-deepseek-zna-wasze-sekrety-oraz-klucze-api/

I've started experimenting with #archiving the content I reference from my site and linking to the archived copies, to prevent information rot on my site. So far I'm using #ArchiveBox , but it has some warts that I don't like. For example, it doesn't have an option to archive full YouTube channels, and the #crawling is very barebones (if I want to archive a full page, for example).

What other solutions have you found?

I'm seeing the trade-off between citability and comprehensiveness in this approach. #WARC-GPT builds a bunch of embeddings ("3624 embeddings from 1296 HTML/PDF records" in this case) and uses them (have I got this right?) to do a first pass, figuring out which files are good matches to a query. It then packages my query and the best file as a query to an #LLM (Mistral:latest running in #Ollama in this case), and shows me the result. So the answer is quite good for info in the file it selected.

Quicker, better, robuster... this is ZIMit 2.0! Our scraper, which can make an offline version of any website, is only a few days away from its release! Stay tuned! https://github.com/openzim/zimit #webscraping #webarchiving #zim #offline #kiwix #warc

Open Measures; Webhook.Site; Wget WARC-Style

Today’s edition is all about data: what’s happening on smaller content platforms like Bluesky and Mastodon, receiving data from apps and APIs, and creating content collections for analysis.

TL;DR

(This is an AI-generated summary of today’s Drop.)

(Perplexity is back to not giving links…o_O)

- Open Measures: A data-driven platform designed to combat online extremism and disinformation by providing journalists, researchers, and organizations with tools and data to investigate harmful activities. The platform tracks a wide array of sites, especially those on the fringes of the internet, and offers a robust API for integrating its data into in-house dashboards for ongoing investigations.

- Webhook.Site: An online tool that provides a unique, random URL (and email address) for testing webhooks or arbitrary HTTP requests. It displays requests in real-time for inspection without needing your own server infrastructure. The site also features a custom graphical editor and scripting language for processing HTTP requests, making it useful for connecting incompatible APIs or quickly building new ones.

- Wget WARC-Style: An introduction to using Wget for creating web archive (WARC) files, a standardized format for archiving web content including HTML, CSS, JavaScript, and digital media, along with metadata about the retrieval process. Wget’s built-in support for WARC format allows for straightforward archiving of websites, with options for compression and extensive archiving like capturing an entire website.

Open Measures

Prelude: I feel so daft for not knowing about this site until yesterday.

I know each and every reader of the Drop groks that disinformation and extremism are yuge problems. While there are definitely guardians out there who help track this activity and thwart the plans of those that seek to do harm, there just aren’t enough of them. But, it turns out, we can all get some telemetry on these activities and, perhaps, do our part, even in some small way.

Open Measures is a data-driven site that lets us all battle online extremism and disinformation. The platform is designed to empower journalists, researchers, and organizations dedicated to social good, enabling them to delve into and investigate these harmful activities. With a focus on transparency and cooperation, Open Measures offers a suite of tools that are well-integrated and open source, along with incredibly cool data. The team behind it works super hard to ensure that both the tools and data are accessible to all who wish to use them for public benefit.

The tool tracks a wide array of sites, particularly those on the fringes of the internet, where extremism and disinformation tend to proliferate. By providing access to hundreds of millions of data points across these fringe social networks, Open Measures equips users with the necessary data to uncover and analyze trends related to hate, misinformation, and disinformation.

They have a great search tool and API. The API enables folks to bring Open Measures data into their own in-house dashboards and plug into the platform to augment their ongoing investigations. This feature is especially useful for modern threat intelligence operations that require systems to communicate seamlessly with one another. The API, while robust, is (rightfully so) rate-limited to mitigate risks from malicious actors. However, for users who find the Public API insufficient for their needs, Open Measures offers Pro or Enterprise functionality, which may better suit their requirements.

The need for and utility of Open Measures cannot be overstated. On a daily basis, we all witness the real-world consequences of online disinformation and extremism. Having tools that can sift through scads of data to identify harmful content is invaluable. For researchers and journalists, this means being able to trace and piece together online threats with greater efficiency. For social good organizations, it means having the resources to combat disinformation and protect communities from harm.

Now, while it’s an essential tool for investigating this malicious activity, the site is, fundamentally, indexing the public content on the listed networks. That means you can use it for arbitrary queries, such as checking out how often the term “bridge” was seen on, say, Bluesky and Mastodon in the past month (ref: section header images). (For those still unaware, there’s yet another firestorm on Mastodon related to a new protocol bridge being built between it and Bluesky.)

Webhook.Site

I needed to test out a webhook for something last week and hit up my Raindrop.io bookmarks to see what I used last time, and was glad to see that Webhook.site was still around and kicking!

If you ever have a need to get the hang of a new webhook (or arbitrary HTTP request) or test out what you might want to do in response to an email, you need to check this site out. Putting it simply, Webhook.site (I do dislike product names that are also domain names) gives you a unique, random URL (and, as noted, even an email address) to which you can send HTTP requests and emails. These requests are displayed in real-time, which lets us inspect their content without the need to set up and maintain our own server infrastructure. This feature is incredibly useful for testing webhooks, debugging HTTP requests, and even creating workflows. Said workflows can be wrangled through the site’s custom graphical editor or using their scripting language to transform, validate, and process HTTP requests in various ways.

Webhook.site can also act as an intermediary (i.e., proxying requests), so you can see what was sent in the past, transform webhooks into other formats, and re-send them to different systems. This makes it a pretty useful tool for connecting APIs that aren’t natively compatible, or for building APIs quickly without needing to set up infrastructure from scratch.

The external service comes with a great CLI tool, which can be used to receive forwarded requests or execute commands. Their documentation is also exceptional.

However, while this service offers a plethora of benefits and is super easy to use, it’s important to exercise caution when deciding what you hook up to it. Webhooks, by their nature, often involve sending data to a public endpoint on the internet. Without proper security measures, this could potentially expose sensitive data to unauthorized parties. As an aside, I’m really not sure you should be using any service that transmits sensitive information over a webhook. Just make sure you go into the use of a third-party site, such as this, with eyes open and with deliberate consideration.

The section header is a result of this call:

curl -X POST 'https://webhook.site/U-U-I-D' \
  -H 'content-type: application/json' \
  -d $'{"message": "Hey there Daily Drop readers!"}'

Wget WARC-Style

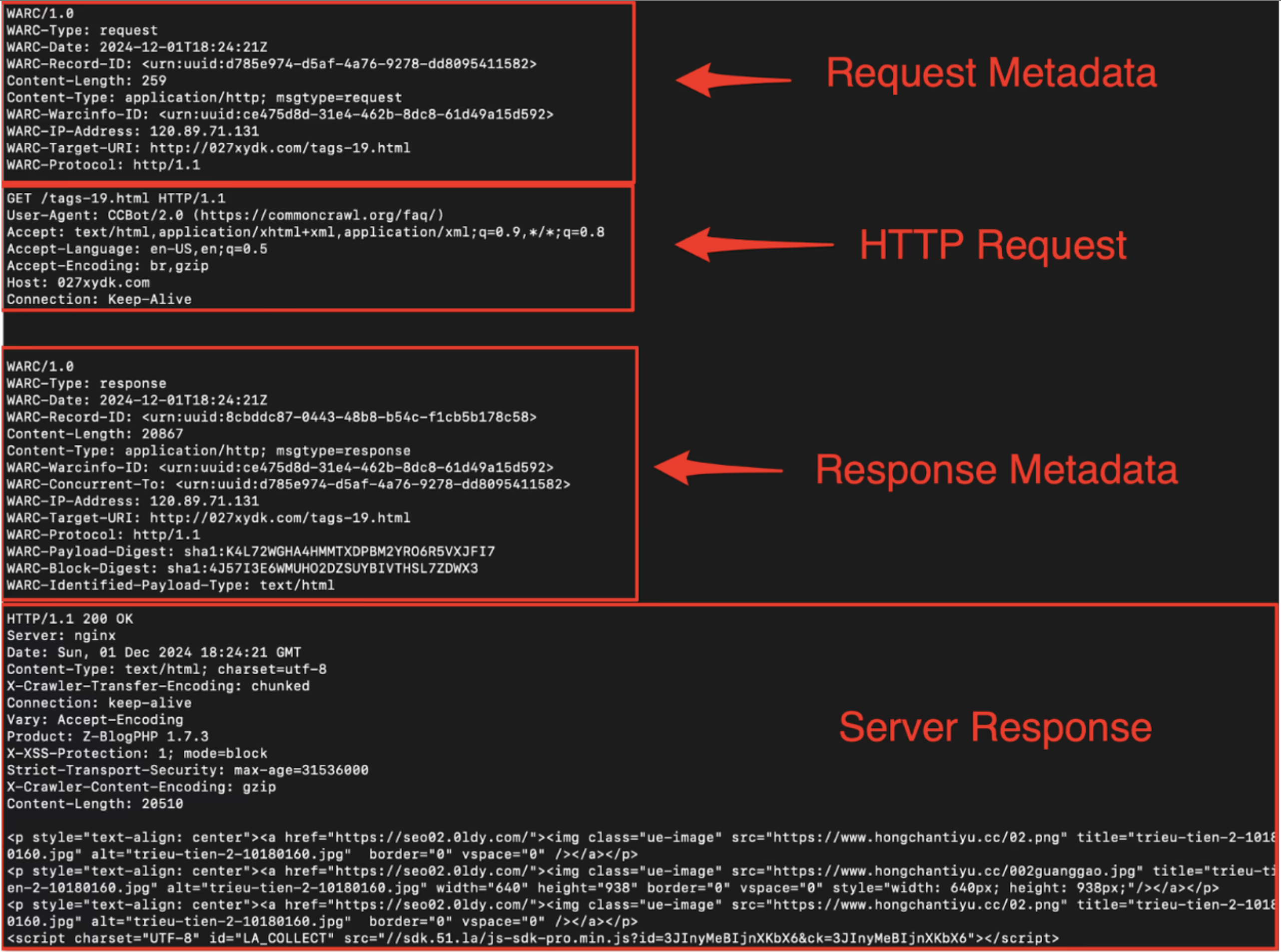

In preparation for one section coming tomorrow, I wanted to spread some scraping love (it is Valentine’s Day, after all) to wget. The curl utility and library gets most of the ahTTpention these days, but there are things it just cannot do (because it was not designed to do them). One of these things is creating web archive (WARC) files.

These files are in a standardized format used for archiving all web content, including the HTML, CSS, JavaScript, and digital media of web pages, along with the metadata about the retrieval process. This makes WARC an ideal format for digital preservation efforts or for anyone looking to capture and store web content for future reference.

Creating WARC files with Wget is straightforward, thanks to its built-in support for the format. To start archiving a website, you simply need to use the --warc-file option followed by a filename for your archive. If you use Wget’s --input-file option, you can save a whole collection of sites into one WARC file. For example:

wget --input-file=urls.txt --warc-file="research-collection"

This command downloads every URL listed in urls.txt and saves the content into a WARC file named research-collection.warc.gz. The .gz extension indicates that the file is compressed with gzip, which is Wget’s default behavior to save space. If you prefer to have an uncompressed WARC file, you can add the --no-warc-compression option to your command.
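A quick way to sanity-check the resulting archive is to count its records by type. This is a minimal sketch, assuming gzip and a grep that supports `-a` (GNU or BSD); `warc_count` is a hypothetical helper name. It only scans for `WARC-Type` header lines rather than parsing records, so a payload that happens to contain an identical line would be miscounted:

```shell
# Hypothetical helper: count the records of one type in a WARC file.
# $1 = WARC file (.warc or .warc.gz), $2 = record type (e.g. response)
warc_count() {
  case "$1" in
    *.gz) gzip -dc -- "$1" ;;   # also handles per-record gzip members
    *)    cat -- "$1" ;;
  esac \
    | LC_ALL=C tr -d '\r' \
    | LC_ALL=C grep -a -c -x "WARC-Type: $2"
}
```

For the archive above, `warc_count research-collection.warc.gz response` prints the number of HTTP responses that were captured, and `warc_count research-collection.warc.gz request` the number of requests.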

For more extensive archiving, such as capturing an entire website, you can combine the --warc-file option with Wget’s --mirror option. This tells Wget to recursively download the website, preserving its directory structure and including all pages and resources. This approach gives you a complete snapshot of the site at the time of download, stored in a WARC file. Wget does not split the archive on its own; if you also pass --warc-max-size, it splits the archive into multiple files, appending a sequence number to each file’s name.
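The mirroring setup described above can be sketched as a small wrapper. This is an illustration, not the post's own command: `mirror_to_warc` and the `DRY_RUN` switch are hypothetical names, while the Wget options themselves are real. `--warc-max-size` is included because it is the option that makes Wget write numbered WARC parts, and `--warc-cdx` additionally writes an index of the records:

```shell
# Hypothetical wrapper: mirror a site into size-limited WARC files.
# $1 = start URL, $2 = WARC base name, $3 = max size per file (default 1G)
# Set DRY_RUN=1 to print the command instead of running it.
mirror_to_warc() {
  set -- wget --mirror --page-requisites \
    --warc-file="$2" --warc-max-size="${3:-1G}" --warc-cdx \
    --wait=1 "$1"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}
```

Running `DRY_RUN=1 mirror_to_warc https://example.com/ site 500M` prints the full command for review before anything is fetched. With a size limit set, the parts should come out named along the lines of site-00000.warc.gz, site-00001.warc.gz, and so on.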

We’ll be using this technique, tomorrow, to explore a new tool that can operate on WARC files, so I wanted to make sure y’all had time to check this out before we tap into that content.

FIN

Remember, you can follow and interact with the full text of The Daily Drop’s free posts on Mastodon via @dailydrop.hrbrmstr.dev@dailydrop.hrbrmstr.dev

Wow! #TIL about #ArchiveBox, your #selfhosted #alternativeTo @internetarchive!

Runs on #Python (OS-packaged or #dockered) and saves both single pages and whole website crawls in every format you could wish for:

self-contained single-page HTML

PDF

PNG screenshot

plaintext

DOM-dump

priv./publ. #archive

media audio/video included (+yt-dlp)

#WARC compat.

https://archivebox.io

https://github.com/ArchiveBox/ArchiveBox

https://demo.archivebox.io

Web archiving is child's play with https://webrecorder.net/

#WARC-compatible format

This week in #DigitalHistoryOFK, together with Annabel Walz (Friedrich-Ebert-Stiftung), we turn to the complex topic of web archiving. From the perspective of memory institutions, she will discuss the characteristics of #borndigital & #reborndigital sources, as well as best practices for archiving them that rely on #WebCrawling as a practice & #WARC as a storage format.

Wed, Nov. 29, 4-6 pm - via Zoom

Got productive an hour earlier than usual today. :P Feels weird to be off by one in the other direction for once.

This week is going to be focusing on roadmapping #DistributedPress and the #ActivityPub integration there, and testing the #WARC file chunking for #IPFS in @webrecorder

A very nice contribution to #StormCrawler improving the generation of #WARC files

Bookmark: What’s Cached is Prologue: Reviewing Recent Web Archives Research Towards Supporting Scholarly Use https://tspace.library.utoronto.ca/bitstream/1807/89426/1/Maemura%20AM2018%20Paper-Postprint.pdf #archive #webarchive #warc