How to Use #AI #Chatbots and What to Know

AI tools like ChatGPT and Claude are set apart from search engines thanks to their chat component.

https://www.cnet.com/tech/services-and-software/how-to-use-ai-chatbots-and-what-to-know/

People feel more lonely, in part because so many of our relationships are mediated by social media.

Instead of atoning for the harms of his platforms, Mark Zuckerberg has a new tech solution: he’s going to give us all a bunch of Meta-powered AI friends to fill the void left by the lack of real ones. It’s an even more dystopian future.

https://www.disconnect.blog/p/mark-zuckerberg-wants-to-you-be-lonely

Rolling Stone: People Are Losing Loved Ones to AI-Fueled Spiritual Fantasies. “Titled ‘Chatgpt induced psychosis,’ the original post came from a 27-year-old teacher who explained that her partner was convinced that the popular OpenAI model ‘gives him the answers to the universe.’ Having read his chat logs, she only found that the AI was ‘talking to him as if he is the next messiah.’ The […]

Asking chatbots for short answers can increase hallucinations, study finds #alucinaciones #aumentar #chatbots #cortas #encuentra #estudio #Las #los #para #Preguntar #puede #Respuestas #ButterWord #Spanish_News Share your opinion in the comments

https://butterword.com/preguntar-a-los-chatbots-para-respuestas-cortas-puede-aumentar-las-alucinaciones-el-estudio-encuentra/?feed_id=20936&_unique_id=681ca05b5f523

"Most of the writing professors I spoke to told me that it’s abundantly clear when their students use AI. Sometimes there’s a smoothness to the language, a flattened syntax; other times, it’s clumsy and mechanical. The arguments are too evenhanded — counterpoints tend to be presented just as rigorously as the paper’s central thesis. Words like multifaceted and context pop up more than they might normally. On occasion, the evidence is more obvious, as when last year a teacher reported reading a paper that opened with “As an AI, I have been programmed …” Usually, though, the evidence is more subtle, which makes nailing an AI plagiarist harder than identifying the deed. Some professors have resorted to deploying so-called Trojan horses, sticking strange phrases, in small white text, in between the paragraphs of an essay prompt.

(...)

Still, while professors may think they are good at detecting AI-generated writing, studies have found they’re actually not. One, published in June 2024, used fake student profiles to slip 100 percent AI-generated work into professors’ grading piles at a U.K. university. The professors failed to flag 97 percent. It doesn’t help that since ChatGPT’s launch, AI’s capacity to write human-sounding essays has only gotten better. Which is why universities have enlisted AI detectors like Turnitin, which uses AI to recognize patterns in AI-generated text. After evaluating a block of text, detectors provide a percentage score that indicates the alleged likelihood it was AI-generated. Students talk about professors who are rumored to have certain thresholds (25 percent, say) above which an essay might be flagged as an honor-code violation. But I couldn’t find a single professor — at large state schools or small private schools, elite or otherwise — who admitted to enforcing such a policy. Most seemed resigned to the belief that AI detectors don’t work."

"The $6.32 cost reported to run the aider polyglot benchmark on Gemini 2.5 Pro Preview 03-25 was incorrect. The true cost was higher, possibly significantly so. The incorrect cost has been removed from the leaderboard.

An investigation determined the primary cause was that the litellm package (used by aider for LLM API connections) was not properly including reasoning tokens in the token counts it reported. While an incorrect price-per-token entry for the model also existed in litellm’s cost database at that time, this was found not to be a contributing factor. Aider’s own internal, correct pricing data was utilized during the benchmark.

(...)

Unfortunately the 03-25 version of Gemini 2.5 Pro Preview is no longer available, so it is not possible to re-run the benchmark to obtain an accurate cost. As a possibly relevant comparison, the newer 05-06 version of Gemini 2.5 Pro Preview completed the benchmark at a cost of about $37."
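To illustrate the kind of undercount described in the quote above, here is a minimal sketch of how leaving reasoning tokens out of the token count shrinks a reported cost. Everything in it is hypothetical: the `Usage` fields, the `cost_usd` helper, the token counts, and the per-million-token prices are illustrative assumptions, not litellm's or aider's actual code or Gemini's real pricing. It also assumes reasoning tokens are billed at the output-token rate.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    """Hypothetical token counts for one benchmark run."""
    prompt_tokens: int
    completion_tokens: int  # visible output tokens
    reasoning_tokens: int   # hidden "thinking" tokens


def cost_usd(usage: Usage, input_price_per_m: float, output_price_per_m: float,
             include_reasoning: bool = True) -> float:
    """Estimate cost in USD from prices quoted per million tokens.

    Assumption: reasoning tokens are billed like output tokens.
    """
    output_tokens = usage.completion_tokens
    if include_reasoning:
        output_tokens += usage.reasoning_tokens
    total = (usage.prompt_tokens * input_price_per_m
             + output_tokens * output_price_per_m)
    return total / 1_000_000


# Made-up numbers, chosen only to show the size of the gap.
usage = Usage(prompt_tokens=1_000_000, completion_tokens=200_000,
              reasoning_tokens=800_000)
reported = cost_usd(usage, 1.0, 10.0, include_reasoning=False)
actual = cost_usd(usage, 1.0, 10.0, include_reasoning=True)
print(f"reported: ${reported:.2f}, actual: ${actual:.2f}")
```

With these made-up numbers the reported figure is a fraction of the actual one, which is the shape of the discrepancy the quote describes, even with correct per-token prices.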

PsyPost: Are AI lovers replacing real romantic partners? Surprising findings from new research. “A new study published in the Archives of Sexual Behavior offers insight into how romantic relationships with virtual agents—artificially intelligent characters designed to simulate human interaction—might influence people’s intentions to marry in real life. The research found that these […]

A good alternative to the faulty Gemini and the now-biased (and trippy) ChatGPT... https://mindsconnected.tech/index.php?showtopic=1126&view=findpost&p=8597 #aichatbots #chatbots #llm #ai

(please leave your comments on the thread linked)

Generative-#AI #chatbots — #ChatGPT but also #Google’s #Gemini, #Anthropic’s #Claude, #Microsoft’s #Copilot, & others — take their notes during class, devise their study guides & practice tests, summarize novels & textbooks, & brainstorm, outline, & draft their essays. #STEM #students are using AI to automate their #research & data #analyses & to sail through dense #coding & debugging assignments.

So which chatbot should I use to calm my stage fright?

Anyone have experience with this?

I'm supposed to speak in front of people in Bremen tomorrow

AI chatbots are now also supposed to help people cope with loneliness and mental health problems. They are also marketed as a substitute for missing human relationships. Some of them try to fill the void in lonely people's lives with disturbing fantasies of violence and sex, and do not even shy away from giving instructions for murder.

Some of the companies that offer these #Chatbots have no intention of moderating the chats.

What happens when AI no longer just helps, but replaces?

In the new #DRANBLEIBEN issue: #Chatbots that "advise" children. #KI (AI) that calls our parents for us. People who drift off spiritually with GPT. And a #Marketing industry that is in the middle of abolishing itself. Plus: 6 "Snow Leopards" that could change 2025.

https://theandrecramer.substack.com/p/dranbleiben-schnelle-empfehlungen

Companies are spending billions building lying machines, AKA LLMs:

If the market spent just a fraction of the gazillions of dollars it pays to train these Large Language Models on hiring journalists, fact checkers, technical writers, and editors, there would be much less need for users to rely on LLMs in the first place. Most of the time, chatbots are solutions looking for problems that ultimately only end up creating more (and bigger) problems.

"One recent study showed rates of hallucinations of between 15% and 60% across various models on a benchmark of 60 questions that were easily verifiable relative to easily found CNN source articles that were directly supplied in the exam. Even the best performance (15% hallucination rate) is, relative to an open-book exam with sources supplied, pathetic. That same study reports that, “According to Deloitte, 77% of businesses who joined the study are concerned about AI hallucinations”.

If I can be blunt, it is an absolute embarrassment that a technology that has collectively cost about half a trillion dollars can’t do something as basic as (reliably) check its output against Wikipedia or a CNN article that is handed on a silver platter. But LLMs still cannot - and on their own may never be able to - reliably do even things that basic.

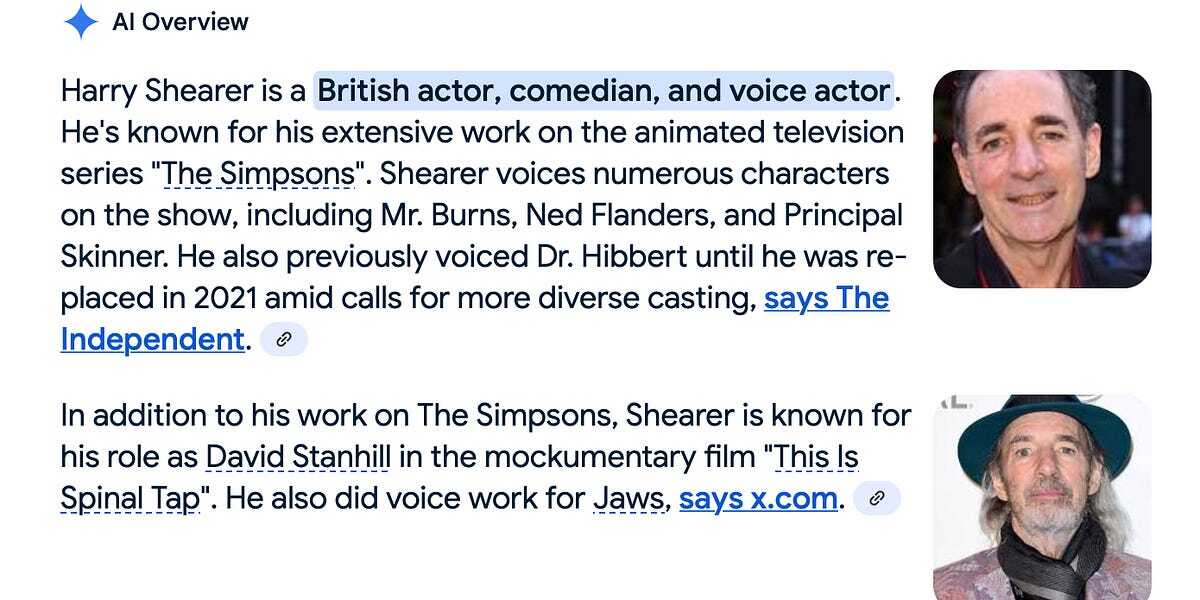

LLMs don’t actually know what a nationality, or who Harry Shearer is; they know what words are and they know which words predict which other words in the context of words. They know what kinds of words cluster together in what order. And that’s pretty much it. They don’t operate like you and me.

(...)

Even though they have surely digested Wikipedia, they can’t reliably stick to what is there (or justify their occasional deviations therefrom). They can’t even properly leverage the readily available database that parses Wikipedia boxes into machine-readable form, which really ought to be child’s play..."

https://garymarcus.substack.com/p/why-do-large-language-models-hallucinate

#AI #GenerativeAI #LLMs #Chatbots #Hallucinations

ChatGPT and Co.: You should never reveal these 6 things! - t3n – digital pioneers

https://t3n.de/news/6-dinge-die-du-chatgpt-nicht-verraten-solltest-1681948/ #Chatbots #ChatGPT #Gemini #Privatsphäre #Datenschutz

Yesterday I ordered food online, but something went a little wrong, so I contacted support. They called me, and for a moment I thought it was a bot or a recorded voice or something. I hated it. Then I realized there was a human on the line.

I was planning to build an LLM + TTS + speech recognition setup and deploy it on an A311D, to see if I could practice a British accent with it. Now I'm rethinking what I want to do. The way we are going doesn't lead anywhere good. I would hate to have to talk to a voice-enabled chatbot as a support agent rather than a human.

And don't get me wrong: voice-enabled chatbots can have tons of good uses. But replacing humans with LLMs is not one of them, I think.

What are we meant to think? This can happen in many papers. The likelihood of them all being written by #genAI is low (at this point). But this is what comes out of the detection bots. QuillBot is just one detector, and it seems like one people would actually use.

Pathetic.

#aidetection #aidetector #chatbots #academicchatter #academia

paper: https://www.sciencedirect.com/science/article/abs/pii/S0197397525001031

Anthropic Claude Voice Mode Nears Launch with Web Search and Discussions on File Uploads

#AI #GenAI #Chatbots #VoiceAssistants #ClaudeAI #Anthropic #VoiceMode #TechNews #AIUpdate #ConversationalAI #LLMs