#PennedPossibilities 649 2/2 — What research did you conduct for your WIP, and did you uncover anything surprising or fascinating?

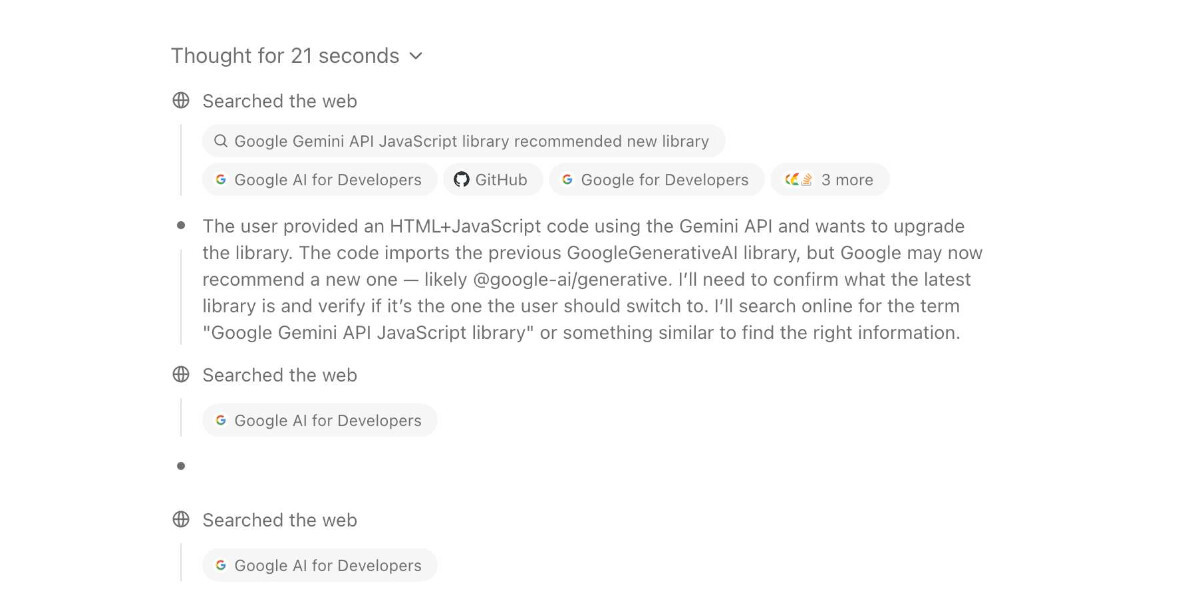

This answer, however, should interest any author wanting to learn something from another author's search behavior. I finally got completely fed up with the substandard results from DuckDuckGo and the on-again, off-again AI search creeping into Google results, even with &udm=14.

A few days ago I decided to research paid search. All FREE search, it goes without saying, monetizes your behavior, time, or attention, so I understand it isn't free. How much do you make per hour? When I search for anything that could be construed as a product or service someone could SELL, it's impossible to find answers. Try looking for words to describe how to rock a baby, for example. I'm sure you have a slew of searches you've given up on.

I am trialing kagi.com. I am NOT advertising it; I'm not endorsing it. I've only tried two searches of the 100 allocated to me so far. However, those two have been so full of useful results that I'm still mining them the next day.

I'll report back after I use it more.

[Author retains copyright (c)2025 R.S.]

#BoostingIsSharing

#gender #fiction #writer #author

#writing #writingcommunity #writersOfMastodon #writers

#RSdiscussion

#search #kagi #google #duckDuckGo #ai #aisearch